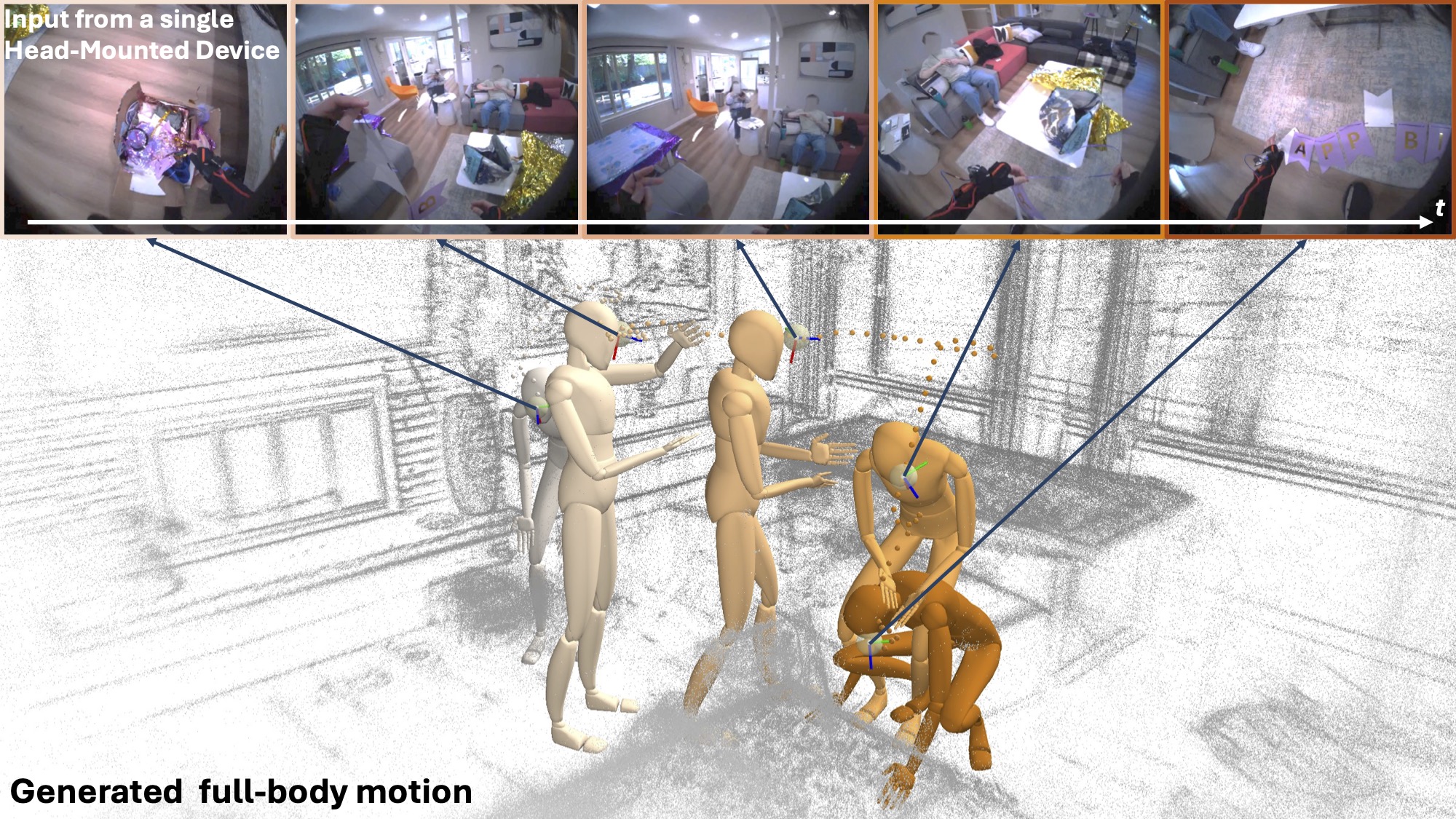

This paper investigates the online generation of realistic full-body human motion using a single head-mounted device with an outward-facing color camera and the ability to perform visual SLAM. Given the inherent ambiguity of this setup, we introduce a novel system, HMD2, designed to balance motion reconstruction and motion generation.

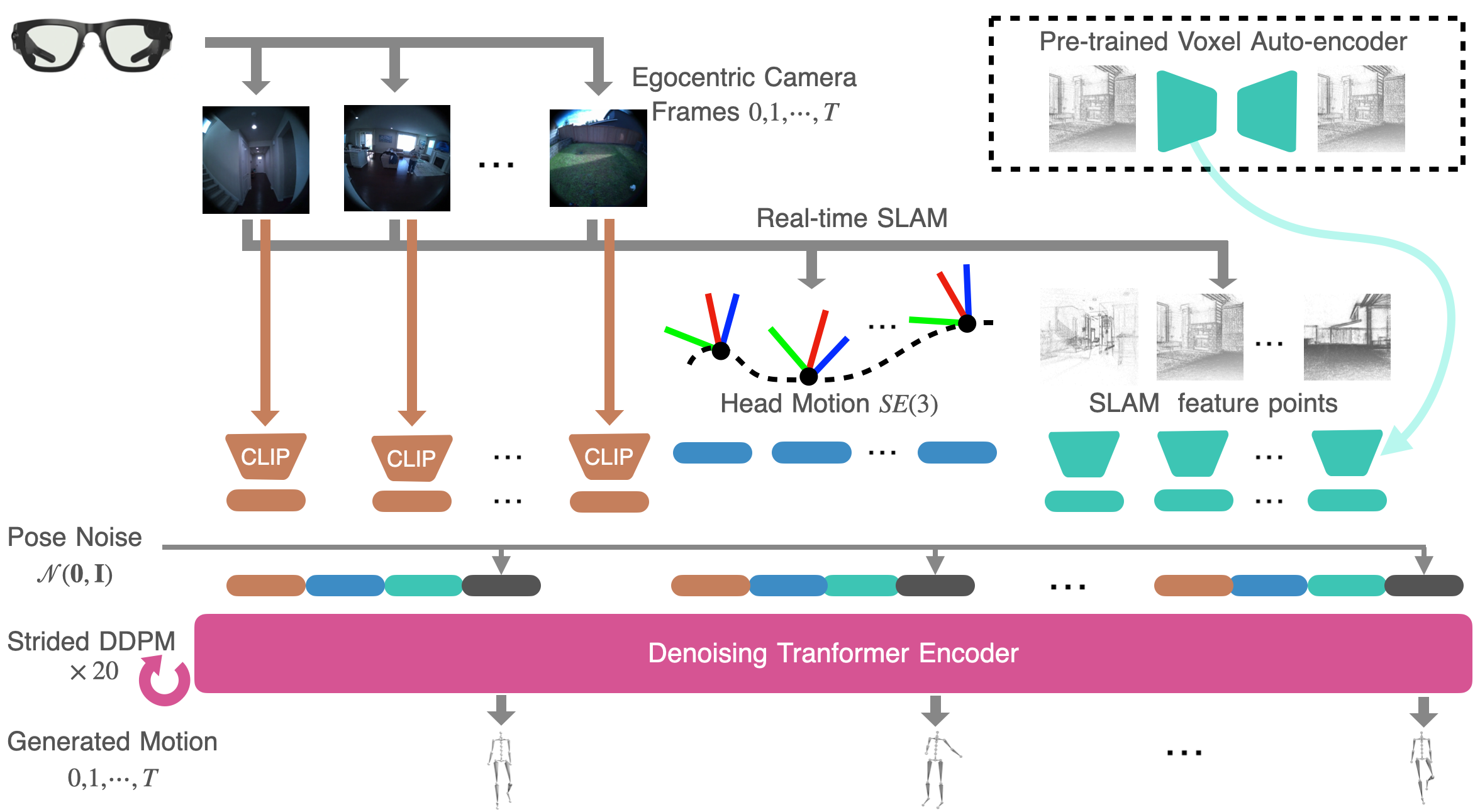

From a reconstruction standpoint, our system aims to make maximal use of the camera streams to produce both analytical and learned features, including head motion, the SLAM point cloud, and image embeddings. On the generative front, HMD2 employs a multi-modal conditional motion diffusion model that incorporates a time-series backbone to maintain temporal coherence in the generated motions, and it uses autoregressive in-painting to enable online motion inference with minimal latency (0.17 seconds). Collectively, we demonstrate that our system offers a highly effective and robust solution that scales to an extensive dataset of over 200 hours, collected in a wide range of complex indoor and outdoor environments using publicly available smart glasses.
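To make the idea of autoregressive in-painting concrete, the following is a minimal sketch and not the HMD2 implementation. It assumes a sliding window in which the first few frames are copied from previously generated motion and held fixed while the remaining frames are denoised. Every name here (`toy_denoiser`, `inpaint_window`, `generate_online`), the 1-D "pose" per frame, the linear noise schedule, and the simplified predict-then-re-noise sampler are assumptions made for illustration; a real system would use a learned network conditioned on head motion, SLAM points, and image embeddings.

```python
import math
import random


def toy_denoiser(x_t, t, cond):
    """Hypothetical stand-in for a learned network that predicts the clean window.
    It simply pulls each noisy frame halfway toward its conditioning value."""
    return [0.5 * x + 0.5 * c for x, c in zip(x_t, cond)]


def add_noise(x0, alpha_bar, rng):
    """Forward diffusion: scale the clean frames and add Gaussian noise."""
    s = math.sqrt(alpha_bar)
    n = math.sqrt(1.0 - alpha_bar)
    return [s * x + n * rng.gauss(0.0, 1.0) for x in x0]


def inpaint_window(known, cond, window, steps, rng):
    """Sample `window` frames whose first len(known) frames are held fixed."""
    k = len(known)
    # Assumed linear schedule: index 0 is the least noisy level.
    alpha_bars = [1.0 - (i + 1) / (steps + 1) for i in range(steps)]
    x = [rng.gauss(0.0, 1.0) for _ in range(window)]
    for t in reversed(range(steps)):
        # In-painting: replace the known region with a noised copy of the
        # already-generated frames at the current noise level.
        x[:k] = add_noise(known, alpha_bars[t], rng)
        x0_hat = toy_denoiser(x, t, cond)
        x0_hat[:k] = known  # keep the known frames exact in the estimate
        # Simplified sampler: re-noise the estimate to the next, lower level.
        x = add_noise(x0_hat, alpha_bars[t - 1], rng) if t > 0 else x0_hat
    return x


def generate_online(cond_stream, window=8, overlap=4, steps=10, seed=0):
    """Autoregressively extend the motion, one overlapping window at a time."""
    rng = random.Random(seed)
    motion = []
    stride = window - overlap
    for start in range(0, len(cond_stream) - window + 1, stride):
        cond = cond_stream[start:start + window]
        known = motion[start:start + overlap]  # empty for the first window
        out = inpaint_window(known, cond, window, steps, rng)
        motion.extend(out[len(known):])  # append only the newly generated frames
    return motion


if __name__ == "__main__":
    conditioning = [0.1 * i for i in range(20)]  # made-up per-frame signal
    print(len(generate_online(conditioning)))
```

In this sketch each new window only has to produce `window - overlap` fresh frames, which is the property that makes a windowed scheme suitable for online use; the window size, overlap, and step count shown are arbitrary and are not taken from the paper.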

Our system, HMD2, generates realistic full-body motion that aligns with the signals from a single head-mounted device. Using the image stream from the egocentric camera, together with the head trajectory and feature point cloud from the onboard SLAM system, we employ a diffusion-based framework to generate the wearer's full-body motion.

@inproceedings{guzov-jiang2025hmd2,
  title = {HMD^2: Environment-aware Motion Generation from Single Egocentric Head-Mounted Device},
  author = {Guzov, Vladimir and Jiang, Yifeng and Hong, Fangzhou and Pons-Moll, Gerard and Newcombe, Richard and Liu, C. Karen and Ye, Yuting and Ma, Lingni},
  booktitle = {International Conference on 3D Vision (3DV)},
  year = {2025},
  month = {March},
}